Hyperplane of angle-oriented image recognition.

# Separating hyperplane: update

In this study, the HD-SVM algorithm is implemented, and HD-SVM, TIL, and NIL are compared for process fault detection and diagnosis (FDD). By improving the mechanism for selecting samples, HD-SVM overcomes TIL's weakness in retaining information. Because samples that would otherwise be discarded are also considered, the loss of information caused by discarding samples is significantly reduced. The results show that HD-SVM substantially reduces the training time of the classifier compared with NIL (1/10), while increasing its accuracy by 1.1% compared with TIL. The study assumes that the datasets used for training, testing, and incremental learning of the classifier contain no missing or outlier samples. The effects of missing and outlier samples on these methods should be investigated in a separate study. Future work includes developing a framework that not only updates classifiers automatically but also monitors and measures progressive changes in the process, in order to detect abnormal process behaviour related to drift (Figure 8.5).

# Separating hyperplane: more

In the HD-SVM incremental learning algorithm, the training set for incremental learning includes the samples that violate the KKT (Karush–Kuhn–Tucker) conditions, and it also includes samples that satisfy them. Source: Antonio Espuña, in Computer Aided Chemical Engineering, 2016.
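The selection step above can be sketched for a linear SVM with decision function f(x) = w·x + b. A new sample with label y ∈ {−1, +1} enters with Lagrange multiplier α = 0. For such a sample the KKT condition is y·f(x) ≥ 1, and the sample violates it when y·f(x) < 1. The text does not say which KKT-satisfying samples HD-SVM keeps. This sketch therefore **assumes** a hypothetical threshold `band`: satisfying samples with 1 ≤ y·f(x) < 1 + band lie close to the margin and are kept too. The function names and `band` are illustrative only. They are not the published HD-SVM procedure.

```python
from typing import List, Sequence, Tuple


def decision(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear SVM decision function f(x) = w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def select_incremental_samples(
    w: Sequence[float],
    b: float,
    X_new: List[Sequence[float]],
    y_new: List[int],
    band: float = 0.5,  # hypothetical threshold, an assumption (not from HD-SVM)
) -> Tuple[List[int], List[int]]:
    """Split new samples into KKT violators and near-margin KKT satisfiers.

    A new sample enters with alpha = 0, so its KKT condition is y * f(x) >= 1.
    """
    violators, near_margin = [], []
    for i, (x, y) in enumerate(zip(X_new, y_new)):
        m = y * decision(w, b, x)  # functional margin of the sample
        if m < 1:
            violators.append(i)  # violates KKT: inside the margin or misclassified
        elif m < 1 + band:
            near_margin.append(i)  # satisfies KKT but lies close to the margin
    return violators, near_margin
```

Consider w = (1, 0), b = 0, and samples (0.5, 0), (1.2, 0), (3.0, 0) labelled +1. Samples 0 is a violator and sample 1 is near the margin. Sample 2 lies far from the margin and is dropped. In an incremental step, both index lists would be merged with the retained support vectors before retraining. A rule that keeps only the violators corresponds to the narrower selection the text attributes to TIL.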

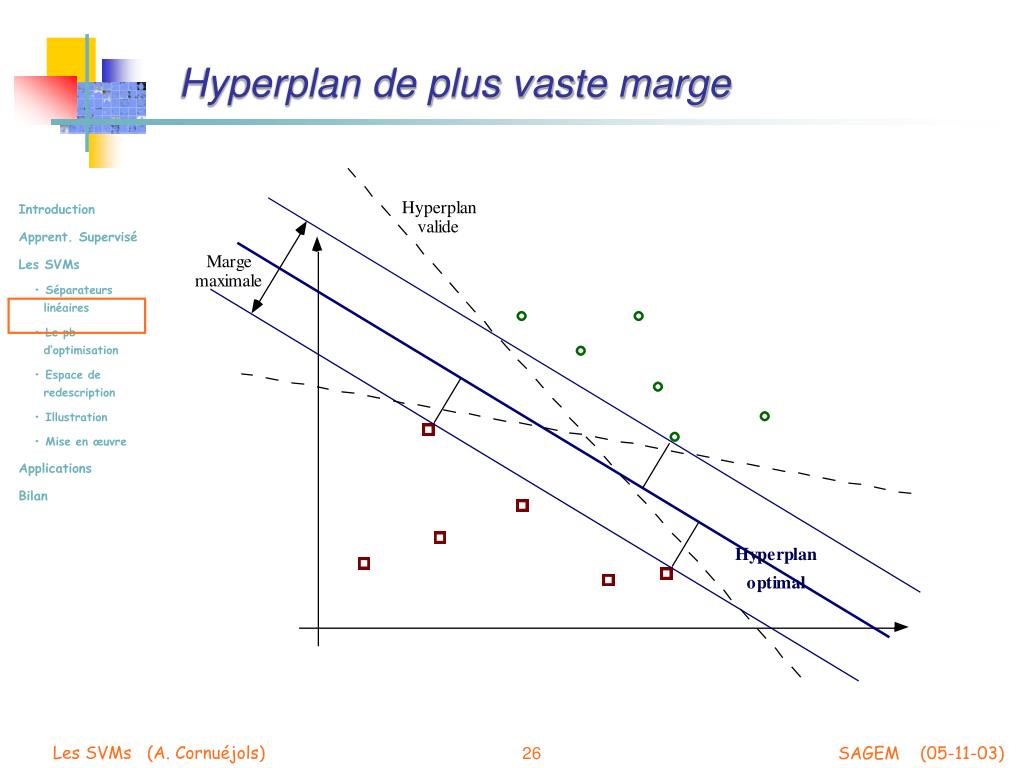

In geometry, the hyperplane separation theorem is a theorem about disjoint convex sets in n-dimensional Euclidean space. There are several rather similar versions. In one version, if both disjoint convex sets are closed and at least one of them is compact, then there is a hyperplane between them. There are even two parallel hyperplanes between them, separated by a gap. In another version, if both disjoint convex sets are open, then there is a hyperplane between them, but not necessarily any gap. An axis that is orthogonal to a separating hyperplane is a separating axis, because the orthogonal projections of the convex bodies onto the axis are disjoint.

The hyperplane separation theorem is due to Hermann Minkowski. The Hahn–Banach separation theorem generalizes the result to topological vector spaces. A related result is the supporting hyperplane theorem.

The hypotheses of the first version cannot simply be dropped. For two disjoint convex sets A and B, one type of counterexample has A compact and B open. For example, A can be a closed square and B an open square that touches A. The two sets are disjoint, but the distance between them is zero, so no gap fits between them. (By an instance of the second version, however, there is a hyperplane that separates their interiors.)

In the context of support-vector machines, the optimally separating hyperplane, or maximum-margin hyperplane, is a hyperplane that separates the convex hulls of two sets of points and is equidistant from the two.
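The separating-axis idea can be sketched for two convex polygons in the plane. The sketch assumes each polygon is given as a list of vertices in order around its boundary. For convex polygons, it is enough to test the edge normals of both polygons. If the projections onto any one normal are disjoint, that normal is a separating axis. The function names are hypothetical, chosen for illustration.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def edge_normals(poly: List[Point]) -> List[Point]:
    """Return one normal vector per edge of a polygon given in vertex order."""
    normals = []
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Rotate the edge vector (dx, dy) by 90 degrees to obtain a normal.
        normals.append((-(y2 - y1), x2 - x1))
    return normals


def project(poly: List[Point], axis: Point) -> Tuple[float, float]:
    """Project every vertex onto the axis and return the (min, max) interval."""
    dots = [x * axis[0] + y * axis[1] for x, y in poly]
    return min(dots), max(dots)


def find_separating_axis(a: List[Point], b: List[Point]) -> Optional[Point]:
    """Return an edge normal on which the projections of a and b are disjoint, else None."""
    for axis in edge_normals(a) + edge_normals(b):
        a_min, a_max = project(a, axis)
        b_min, b_max = project(b, axis)
        # Strictly disjoint intervals mean there is a gap along this axis.
        if a_max < b_min or b_max < a_min:
            return axis
    return None
```

For the unit square and a copy shifted two units to the right, the function returns the axis (-1, 0). For two overlapping squares it returns None. Two closed squares that share an edge also return None, because the strict test demands a gap, which touching sets do not have.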
